Understanding Few-Shot Learning: Definition, Evolution, and Applications

Explore few-shot learning, an AI approach that enables models to learn from minimal data.

Key Highlights:

- Few-shot learning (FSL) enables models to learn from a minimal number of labelled examples, typically 5 to 10, contrasting with traditional methods that require extensive datasets.

- FSL is significant in areas with scarce data, allowing for faster training and reflecting human cognitive abilities in concept acquisition.

- Key domains benefiting from FSL include natural language processing (NLP), computer vision, and robotics.

- FSL supports NLP tasks such as text categorisation and sentiment analysis with limited training data, reducing resource needs.

- The evolution of FSL is linked to advancements in meta-learning, enabling systems to learn how to learn and adapt quickly to new tasks.

- Landmark studies, such as Li et al. in 2006, laid the groundwork for systems to leverage prior knowledge for rapid adaptation.

- Recent advancements in large language models like GPT-3 and GPT-4 demonstrate remarkable few-shot learning capabilities.

- Methodologies in FSL include meta-learning, transfer learning, and data augmentation, which improve model performance with limited data.

- Applications of FSL span healthcare for rare disease identification, finance for fraud detection, and NLP for improving language model tasks.

- Ongoing research addresses challenges like overfitting and aims to narrow the accuracy gap between few-shot models and fully supervised ones.

Introduction

Few-shot learning (FSL) is changing artificial intelligence by enabling machines to learn from a handful of examples, often as few as five to ten. This capability mirrors the way humans pick up new concepts, and it addresses the need to use data efficiently when acquiring and labelling it is difficult and costly.

As organisations strive to harness AI's potential, the question arises: how can few-shot learning reshape the landscape of machine intelligence? Moreover, how can it overcome the inherent limitations of traditional training methods? The answers to these questions may redefine our approach to machine learning.

Define Few-Shot Learning and Its Significance in AI

Few-shot learning (FSL) is a machine learning paradigm in which models acquire knowledge from a minimal number of labelled examples, typically around 5 to 10. This stands in stark contrast to conventional machine learning methods, which often require extensive datasets for effective training. The ability to generalise from limited examples is particularly advantageous where data is scarce or costly to obtain. It streamlines the training process and also reflects human cognition: people can grasp a new concept after minimal exposure. A common analogy is a person who learns to identify a rare bird after seeing only a few images of it, whereas a conventionally trained classifier would typically need many more labelled images to do the same.

The significance of few-shot learning extends across multiple domains, including:

- natural language processing (NLP)

- computer vision

- robotics

For instance, in NLP, few-shot learning allows systems to perform tasks such as text categorisation and sentiment analysis with just a few labelled instances, drastically reducing the time and resources needed for data collection and labelling. This adaptability is vital in fast-moving fields where the availability of labelled data can become a bottleneck. Few-shot learning is also closely linked to meta-learning, which equips models to adapt swiftly to new tasks by leveraging prior knowledge from related tasks.

Few-shot learning therefore makes AI systems more effective and adaptable. By reducing the dependence on large labelled datasets that characterises traditional machine learning, it lowers operational costs and accelerates the deployment of AI solutions in real-world applications. As organisations increasingly aim to integrate AI capabilities into their operations, the relevance of few-shot learning continues to grow, positioning it as a key driver of innovation within the AI landscape.

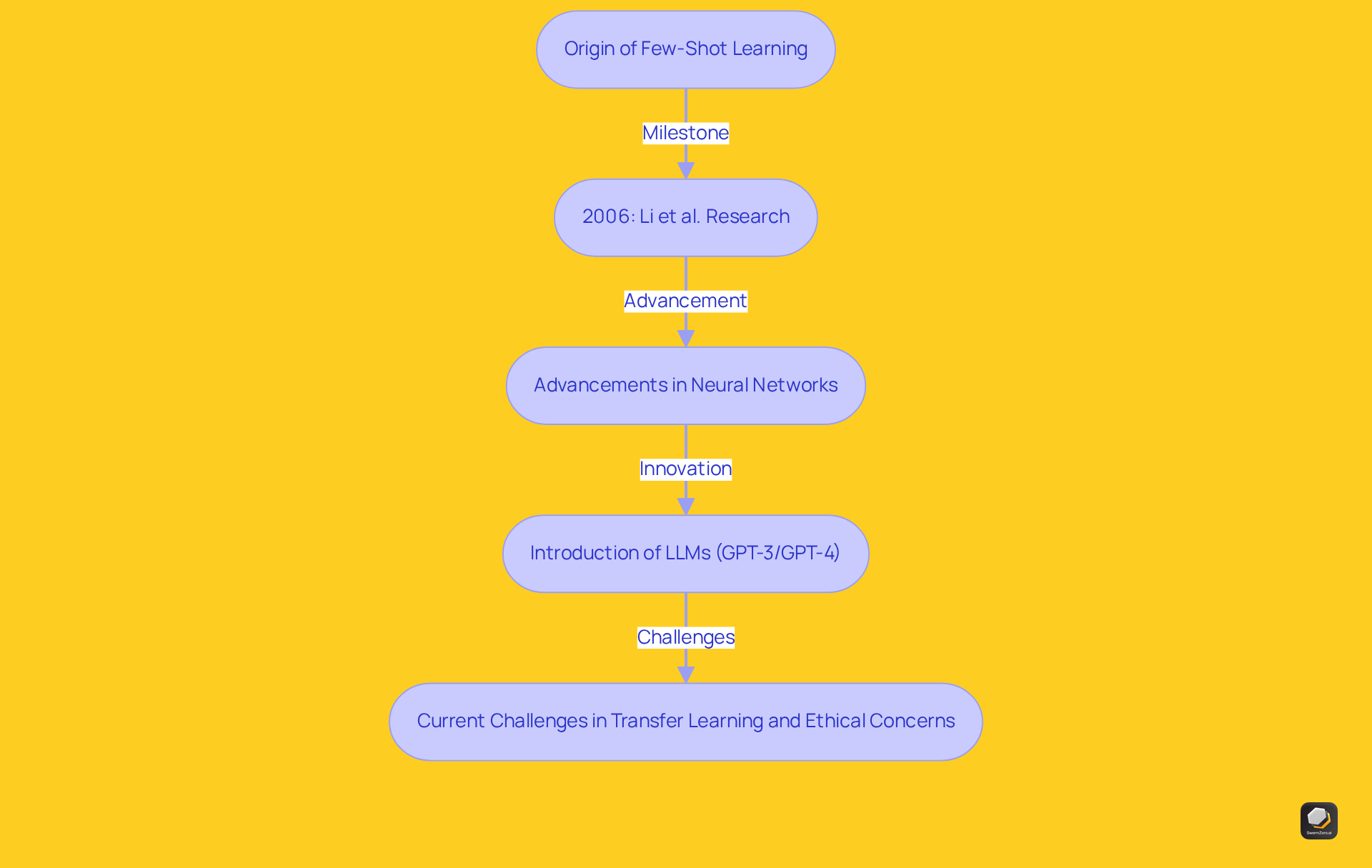

Trace the Evolution and Historical Context of Few-Shot Learning

Few-shot learning emerged within the broader field of machine learning as researchers sought to overcome the limitations of traditional supervised training, which often requires extensive labelled datasets. Its evolution is closely tied to advances in meta-learning, which emphasises that systems should learn how to learn.

A landmark in this trajectory was the work by Li et al. in 2006, which laid the groundwork for rapid adaptation by enabling systems to leverage prior knowledge to adjust to new tasks with minimal data. As neural networks and deep learning have progressed, they have significantly enhanced the capabilities of few-shot systems, fostering approaches that improve performance across a variety of real-world applications.

Notably, large language models (LLMs) such as OpenAI's GPT-3 and GPT-4 have shown strong few-shot abilities, performing diverse tasks when given only a handful of examples.
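With LLMs, the few examples are usually supplied directly in the prompt rather than used to retrain the model. The sketch below shows one way such a prompt might be assembled for sentiment analysis. The function name `build_few_shot_prompt`, the "Text:" and "Sentiment:" layout, and the sample reviews are all illustrative assumptions, not a format required by any particular model or API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt: instruction, labelled demonstrations, then the unlabelled query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The query uses the same layout but leaves the label for the model to complete.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical labelled demonstrations (the "few shots").
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("Setup took two minutes and just worked.", "positive"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each text as positive or negative.",
    examples,
    "The speaker sounds tinny and distorted.",
)
print(prompt)
```

The resulting string would be sent to a language model, which is expected to continue the pattern by filling in the final label. No model call is shown here because the exact client API depends on the provider.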

Nevertheless, challenges persist, particularly in cross-domain few-shot learning, where knowledge learned in one domain can be difficult to transfer to another. Moreover, ethical concerns about biases in training data continue to pose significant hurdles in the development and deployment of AI systems.

This evolution reflects a growing understanding of how to make effective use of limited data, paving the way for more adaptable AI systems.

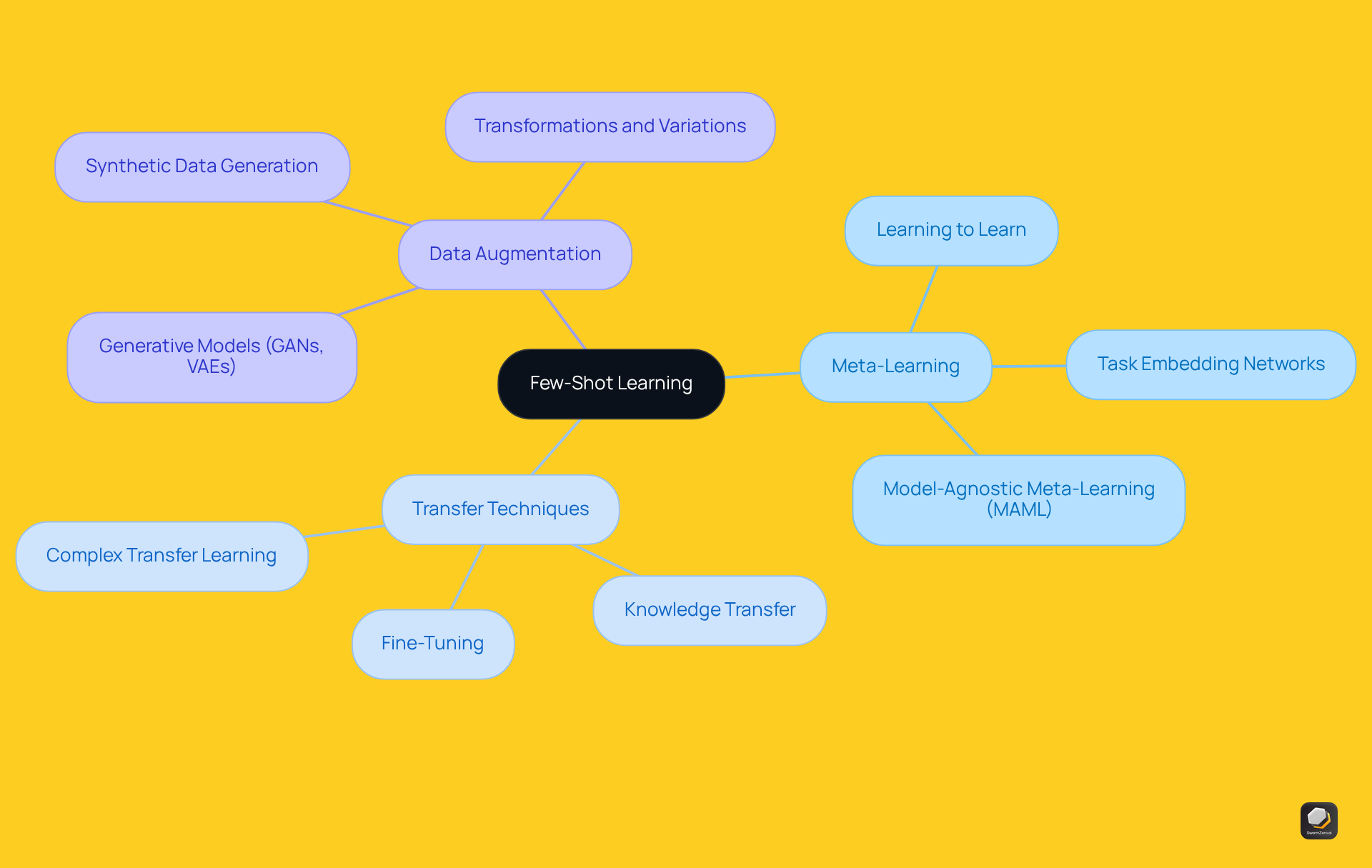

Examine Key Characteristics and Methodologies of Few-Shot Learning

Few-shot learning draws on techniques such as meta-learning, transfer learning, and data augmentation. Meta-learning, often termed 'learning to learn,' equips systems to adapt swiftly to new tasks with minimal data by training them across many diverse tasks. Transfer learning, by contrast, lets a system reuse what it learned on one task to improve performance on another, bridging gaps in data availability. Data augmentation enlarges the training set with modified versions of the existing examples, adding diversity and improving model generalisation. Combined, these methods produce systems that can reach good accuracy even with limited data.
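To make "training across diverse tasks" more concrete, the sketch below samples one small training task, often called an episode, from a labelled pool. It assumes the common "N-way K-shot" convention (N classes, K labelled support examples per class), which is not spelled out above. The function name `sample_episode` and the toy data pool are hypothetical.

```python
import random


def sample_episode(data_by_class, n_way, k_shot, n_query, rng):
    """Draw one task: n_way classes with k_shot support and n_query query items each."""
    classes = rng.sample(sorted(data_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Sample without replacement so support and query items never overlap.
        items = rng.sample(data_by_class[label], k_shot + n_query)
        support += [(x, label) for x in items[:k_shot]]
        query += [(x, label) for x in items[k_shot:]]
    return support, query


# Hypothetical toy pool: 10 classes, each with 20 example identifiers.
pool = {f"class_{c}": [f"class_{c}_item_{i}" for i in range(20)] for c in range(10)}

rng = random.Random(0)
support, query = sample_episode(pool, n_way=5, k_shot=5, n_query=3, rng=rng)
print(len(support), len(query))  # 25 15
```

A meta-learning loop would typically draw many such episodes, adapt the model on each support set, and score it on the matching query set, so that the model practises learning from few examples. That outer loop is omitted here.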

For instance, metric-based methods use a similarity measure to classify a new example according to its proximity to known instances. However, overfitting remains a common problem when so few examples are available, and strategies such as regularisation and transfer learning are used to mitigate it. Ongoing research aims to narrow the accuracy gap between few-shot models and fully supervised ones, with advances involving self-supervised methods and cross-attention networks that improve model adaptability.
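A minimal sketch of a metric-based classifier is shown below. It averages the few labelled examples of each class into a prototype and assigns a new example to the class with the nearest prototype. The choice of Euclidean distance, the hand-made two-dimensional feature vectors, and the bird labels are illustrative assumptions. In practice the vectors would usually come from a learned embedding model.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def fit_prototypes(support):
    """Build one prototype per label from (feature_vector, label) pairs."""
    by_label = {}
    for vector, label in support:
        by_label.setdefault(label, []).append(vector)
    return {label: mean_vector(vectors) for label, vectors in by_label.items()}


def classify(prototypes, vector):
    """Return the label whose prototype is closest to the given vector."""
    return min(prototypes, key=lambda label: euclidean(prototypes[label], vector))


# Hypothetical support set: three labelled examples per class.
support = [
    ([0.9, 0.1], "sparrow"), ([1.0, 0.2], "sparrow"), ([0.8, 0.0], "sparrow"),
    ([0.1, 0.9], "kingfisher"), ([0.2, 1.0], "kingfisher"), ([0.0, 0.8], "kingfisher"),
]

prototypes = fit_prototypes(support)
print(classify(prototypes, [0.85, 0.15]))  # sparrow
```

Because only class averages and distances are computed at classification time, adding a new class requires nothing more than a few labelled examples for it.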

Applications of few-shot learning span multiple fields, including robotics, where it facilitates skill acquisition from minimal demonstrations, and natural language processing, where it improves tasks such as sentiment analysis and text classification.

Explore Practical Applications of Few-Shot Learning in Various Domains

Few-shot learning has found applications across multiple domains, including healthcare, finance, and natural language processing.

- In healthcare, few-shot methods are used to identify rare diseases by training systems on limited patient data. This supports faster and more accurate diagnosis.

- In finance, these systems assist in fraud detection by analysing a small number of known fraudulent transactions to recognise patterns indicative of fraud.

- In natural language processing, few-shot learning improves language models' performance on specific tasks, such as sentiment analysis or intent recognition, using minimal labelled examples.

These applications highlight the versatility and effectiveness of few-shot learning in addressing real-world challenges, reinforcing its importance as a critical area of research and development in AI.

Conclusion

Few-shot learning signifies a pivotal evolution in artificial intelligence, empowering systems to learn efficiently from a limited number of examples. This groundbreaking approach not only contrasts with traditional methods that heavily depend on extensive datasets but also mirrors human cognitive functions, enabling swift adaptation to new tasks with minimal training data. As AI continues to advance, few-shot learning emerges as a crucial element that boosts the efficiency and adaptability of machine learning systems.

This article has underscored the importance of few-shot learning across diverse domains, including natural language processing, computer vision, and robotics. Key methodologies such as:

- Meta-learning

- Transfer learning

- Data augmentation

have been explored, demonstrating their roles in enhancing model performance and generalisation. Furthermore, the practical applications in healthcare, finance, and other sectors highlight the transformative potential of few-shot learning in addressing real-world challenges.

With the rising demand for agile and intelligent AI systems, adopting few-shot learning becomes increasingly vital. By leveraging this method, organisations can lower operational costs and expedite the deployment of AI solutions, ultimately fostering innovation. The ongoing research and development in this field promise to unveil even greater capabilities, positioning few-shot learning as a cornerstone of future advancements in artificial intelligence.

Frequently Asked Questions

What is few-shot learning (FSL)?

Few-shot learning (FSL) is a machine learning paradigm that enables models to learn from a minimal number of labelled examples, typically around 5 to 10, contrasting with traditional methods that require extensive datasets for effective training.

Why is few-shot learning significant in AI?

Few-shot learning is significant because it allows models to generalise from limited examples, making it advantageous in scenarios where data is scarce or costly to obtain. It streamlines the training process and reflects human cognitive abilities, enabling quick understanding of new concepts.

In which domains is few-shot learning applicable?

Few-shot learning is applicable across multiple domains, including natural language processing (NLP), computer vision, and robotics.

How does few-shot learning benefit natural language processing (NLP)?

In NLP, few-shot learning allows systems to perform tasks such as text categorisation and sentiment analysis with just a few examples, significantly reducing the time and resources needed for data collection and labelling.

What is the relationship between few-shot learning and meta-learning?

Few-shot learning is closely linked to meta-learning, which equips models to quickly adapt to new tasks by leveraging prior knowledge from related tasks.

What are the advantages of few-shot learning for AI systems?

Few-shot learning makes AI systems more effective and adaptable by reducing the dependence on large labelled datasets that characterises traditional machine learning, lowering operational costs, and accelerating the deployment of AI solutions in real-world applications.

How is few-shot learning positioned in the future of AI?

As organisations increasingly aim to integrate AI capabilities into their operations, few-shot learning is becoming more relevant and is positioned as a key driver of innovation within the AI landscape.
